First Things First: What Is Big Data?

In the era of data-driven decision-making and the proliferation of big data, the need for reliable and accurate data has become paramount. Organizations today rely on vast amounts of data to gain insights, make informed decisions, and maintain a competitive edge. This has given rise to the critical field of Big Data Testing, a specialized form of quality assurance that ensures data correctness, integrity, and performance within large datasets. In this comprehensive tutorial, we will delve into the world of Big Data Testing, exploring its significance, challenges, best practices, and tools.

Gartner defines Big Data as, “High-volume, high-velocity and/or high-variety information assets that demand cost-effective, innovative forms of information processing that enable enhanced insight, decision making, and process automation”.

Put simply, big data means a very large volume of data. For instance, Facebook reportedly generates about 4 petabytes of data every day, with 1.9 billion active users and millions of comments, images, and videos updated or viewed every second.

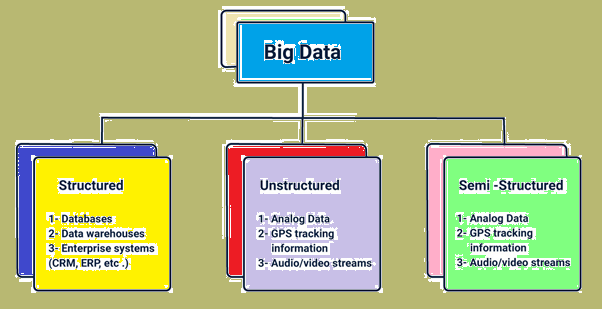

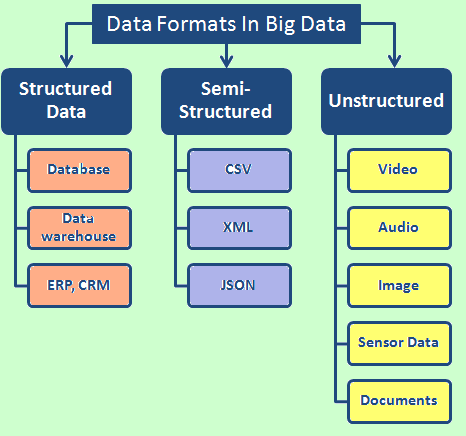

The collected data comes in three formats:

- Structured: Highly organised data with a predefined structure that can be retrieved using simple queries. Examples include databases, data warehouses, and ERP and CRM systems.

- Unstructured: Data with no predefined format, which makes it difficult to store and retrieve. Examples include images, videos, Word documents, presentations, MP3 files, and sensor data.

- Semi-structured: Data that is not rigidly organised but contains tags and metadata. Examples include XML, CSV, and JavaScript Object Notation (JSON).

So What is Big Data Testing?

- Big Data Testing is a specialized process of verifying and validating the data in massive datasets, often characterized by volume, velocity, variety, and complexity.

- It ensures the quality, accuracy, and consistency of data while dealing with diverse data sources, formats, and structures.

- The primary objectives of Big Data Testing include:

- Data Accuracy: Confirming that data values are correct and consistent.

- Data Completeness: Verifying that all the expected data is present in the dataset.

- Data Consistency: Ensuring data consistency across various data sources and timeframes.

- Data Integrity: Detecting data anomalies, errors, and inconsistencies.

- Data Performance: Assessing data processing speed and efficiency.

The primary characteristics of Big Data are:

- Volume: Volume denotes the size of the data.

- Velocity: Velocity is the speed at which data is generated.

- Variety: Variety specifies the types of data generated.

- Veracity: Veracity tells how trustworthy the data is.

- Value: Value describes how big data can be turned into something useful for the business.

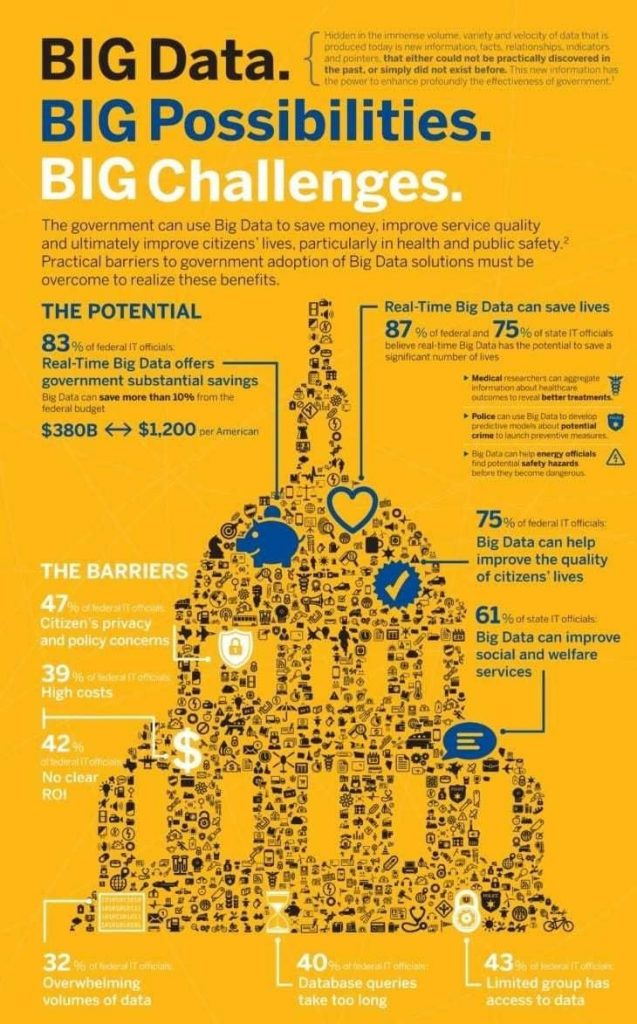

The Need for Big Data Testing

In the fast-paced digital age, data has become the lifeblood of businesses and organizations. The ability to harness and leverage massive volumes of data is a competitive advantage that fuels innovation, enhances decision-making, and optimizes operations. However, with great data comes great responsibility – the responsibility to ensure data quality, reliability, and accuracy. This is where Big Data Testing plays a pivotal role. This section explores why Big Data Testing is needed in today’s data-driven world.

- Data-Driven Decision-Making:

In an era where data drives critical business decisions, the integrity and quality of data are non-negotiable. Decisions related to product development, marketing strategies, customer service, and resource allocation are increasingly data-driven. Flawed or inaccurate data can lead to misguided decisions, resulting in missed opportunities, financial losses, and even reputational damage.

Big Data Testing is crucial to ensure that the data underpinning these decisions is accurate, consistent, and reliable. Without rigorous testing, organizations are essentially navigating a treacherous landscape blindfolded, with the potential for costly missteps.

- Trust in Data:

Trust is a cornerstone of any data-driven organization. When executives, analysts, and data scientists cannot trust the data they work with, the foundation of decision-making crumbles. Inaccurate data can erode trust in analytics and dashboards, leading to skepticism and inefficiencies within the organization.

Big Data Testing helps maintain data trust by identifying and rectifying inaccuracies, inconsistencies, and anomalies in large datasets. When data is proven to be trustworthy, it fosters confidence in the information derived from it, ensuring that strategic decisions are based on a solid foundation.

- Compliance and Regulations:

In recent years, data privacy regulations such as GDPR (General Data Protection Regulation) and CCPA (California Consumer Privacy Act) have become more stringent, imposing severe penalties for data breaches and privacy violations. Ensuring compliance with these regulations is not just a matter of legal obligation but also a necessity for maintaining customer trust.

Big Data Testing plays a crucial role in ensuring data compliance. It helps identify and rectify sensitive data exposure, data leakage, and other privacy-related issues, protecting the organization from legal repercussions and maintaining customer trust.

- Data Quality and Customer Satisfaction:

Data quality is directly linked to customer satisfaction. Inaccurate or incomplete customer data can lead to failed transactions, shipping errors, customer service issues, and a host of other problems that can result in lost customers and damage to a company’s reputation.

By rigorously testing and validating big data, organizations can ensure that customer information is accurate and up to date. This not only reduces operational errors but also enhances the overall customer experience.

- Business Agility:

In a rapidly changing business environment, the ability to adapt and innovate quickly is a competitive advantage. Big Data Testing helps organizations maintain business agility by ensuring that the data infrastructure is robust and can adapt to changing requirements.

Whether it’s scaling up to handle increased data volumes, integrating new data sources, or adapting to changes in data processing workflows, thorough testing ensures that the system can evolve without disrupting operations.

Demystifying 10 Types of Big Data Testing

Big Data has evolved into a fundamental driver of business growth and innovation. However, its immense volume, variety, velocity, and complexity have brought forth a myriad of challenges, particularly in ensuring the accuracy, reliability, and performance of the data. This is where Big Data Testing comes into play. To understand the nuances of this specialized field, it’s crucial to look at the various types of testing it involves. This section walks through ten types of testing used to validate big data.

1. Data Ingestion Testing

Data ingestion is the first step in the data processing pipeline, where data is collected from various sources and ingested into the big data system. Data ingestion testing focuses on verifying that the data is collected accurately, ensuring that it’s complete and uncorrupted during the ingestion process. This type of testing helps prevent data loss, corruption, or duplication at the entry point.
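One way to check ingestion is to reconcile the source records against what actually landed in the target. The sketch below is a minimal illustration, assuming both sides fit in memory as lists of dictionaries that share an `id` key. The function names `record_fingerprint` and `reconcile_ingestion` are hypothetical and not part of any tool mentioned in this tutorial; at real scale the same comparison would typically run as a distributed job.

```python
import hashlib
import json
from collections import Counter


def record_fingerprint(record):
    """Return a stable SHA-256 hash of a record, independent of key order."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile_ingestion(source, target, key="id"):
    """Compare source and ingested records; report lost, duplicated, and corrupted keys."""
    source_by_key = {r[key]: record_fingerprint(r) for r in source}
    target_counts = Counter(r[key] for r in target)
    target_by_key = {r[key]: record_fingerprint(r) for r in target}

    lost = sorted(k for k in source_by_key if k not in target_counts)
    duplicated = sorted(k for k, n in target_counts.items() if n > 1)
    corrupted = sorted(
        k for k, fingerprint in source_by_key.items()
        if k in target_by_key and target_by_key[k] != fingerprint
    )
    return {"lost": lost, "duplicated": duplicated, "corrupted": corrupted}


# Hypothetical sample data
source = [
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": 25.5},
    {"id": 3, "amount": 7.25},
]
target = [
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": 25.0},  # value changed during ingestion
    {"id": 2, "amount": 25.0},  # same key ingested twice
]

report = reconcile_ingestion(source, target)
print(report)
```

For this sample data, the report flags key 3 as lost and key 2 as both duplicated and corrupted.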

2. Data Storage Testing

In the big data ecosystem, data is often stored in distributed file systems and databases. Data storage testing validates that data is correctly stored and retrievable. It involves checking for data completeness, ensuring data partitioning is correct, and verifying data compression techniques. Data storage testing is vital to guarantee the integrity of data throughout its lifecycle.
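The partitioning and compression checks described above can be sketched as follows. This is an illustrative example only: the CRC32-modulo partitioning scheme and the names `partition_for`, `misplaced_records`, and `survives_compression` are assumptions made for the demonstration, and a real system would use whatever partitioner and codec its storage layer is configured with.

```python
import gzip
import zlib


def partition_for(key, num_partitions):
    """Assign a key to a partition using a stable CRC32 hash (illustrative scheme)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def misplaced_records(partitions, num_partitions):
    """Return keys stored in a partition other than the one the scheme assigns."""
    return sorted(
        key
        for partition_id, keys in partitions.items()
        for key in keys
        if partition_for(key, num_partitions) != partition_id
    )


def survives_compression(payload):
    """Check that a gzip round trip returns exactly the original bytes."""
    return gzip.decompress(gzip.compress(payload)) == payload


NUM_PARTITIONS = 4
keys = [f"user-{i}" for i in range(20)]

# Store every key in the partition the scheme assigns to it.
partitions = {p: [] for p in range(NUM_PARTITIONS)}
for key in keys:
    partitions[partition_for(key, NUM_PARTITIONS)].append(key)

# Simulate a storage fault: move one key into the wrong partition.
correct = partition_for("user-0", NUM_PARTITIONS)
wrong = (correct + 1) % NUM_PARTITIONS
partitions[correct].remove("user-0")
partitions[wrong].append("user-0")

print(misplaced_records(partitions, NUM_PARTITIONS))
print(survives_compression(b"id,amount\n1,10.0\n2,25.5\n"))
```

The first check reports the key that was moved to the wrong partition, and the second confirms that compressed data can be read back unchanged.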

3. Data Transformation Testing

Data transformation is a critical stage in data processing, where raw data is converted into a format suitable for analysis. This phase involves data cleansing, formatting, and structuring. Testing in this context focuses on ensuring that data transformation processes are applied accurately, without loss of data or alteration of data integrity. It verifies that data is transformed as intended.
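A transformation test usually feeds known raw input through the transformation and compares the result with a hand-written expected output. The example below is a small sketch of that idea. The `transform` function and its cleansing rules (trimming names, converting dates to ISO format, rounding spend) are hypothetical stand-ins for whatever logic a real pipeline applies.

```python
from datetime import datetime


def transform(raw):
    """Cleanse and restructure one raw record (hypothetical rules)."""
    return {
        "customer_id": int(raw["customer_id"]),
        "name": raw["name"].strip().title(),
        "signup_date": datetime.strptime(raw["signup_date"], "%d/%m/%Y").date().isoformat(),
        "spend": round(float(raw["spend"]), 2),
    }


raw_rows = [
    {"customer_id": "001", "name": "  alice SMITH ", "signup_date": "05/01/2023", "spend": "19.999"},
    {"customer_id": "002", "name": "bob jones", "signup_date": "17/11/2022", "spend": "5"},
]

expected = [
    {"customer_id": 1, "name": "Alice Smith", "signup_date": "2023-01-05", "spend": 20.0},
    {"customer_id": 2, "name": "Bob Jones", "signup_date": "2022-11-17", "spend": 5.0},
]

actual = [transform(row) for row in raw_rows]

# No records lost, and every field transformed as intended.
print(len(actual) == len(raw_rows))
print(actual == expected)
```

Comparing row counts catches data loss, while comparing full records catches unintended changes to individual values.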

4. Data Quality Testing

Data quality testing aims to assess the overall quality of the data. It involves examining data for accuracy, consistency, completeness, and correctness. This type of testing identifies and rectifies issues such as missing values, outliers, and duplicate records. Ensuring data quality is crucial for informed decision-making.
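The three issues named above (missing values, duplicate records, and outliers) can each be detected with a simple rule. The sketch below is one possible approach, not a standard: the function name `quality_report` is hypothetical, and outliers are flagged using the median absolute deviation with an arbitrary multiplier of 5, which is an assumption chosen because it behaves well on small samples.

```python
import statistics


def quality_report(rows, required_fields, key_field, numeric_field, mad_multiplier=5.0):
    """Return row indexes with missing values, duplicate keys, and outliers."""
    missing = [
        i for i, row in enumerate(rows)
        if any(row.get(field) in (None, "") for field in required_fields)
    ]

    seen_keys = set()
    duplicates = []
    for i, row in enumerate(rows):
        if row[key_field] in seen_keys:
            duplicates.append(i)
        seen_keys.add(row[key_field])

    # Outliers: values far from the median, measured in units of the
    # median absolute deviation (MAD).
    values = [row[numeric_field] for row in rows]
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    outliers = [
        i for i, v in enumerate(values)
        if mad > 0 and abs(v - median) > mad_multiplier * mad
    ]
    return {"missing": missing, "duplicates": duplicates, "outliers": outliers}


# Hypothetical sample data
rows = [
    {"id": 1, "email": "a@example.com", "amount": 10},
    {"id": 2, "email": "", "amount": 12},                # missing email
    {"id": 3, "email": "c@example.com", "amount": 11},
    {"id": 3, "email": "c@example.com", "amount": 13},   # duplicate id
    {"id": 5, "email": "e@example.com", "amount": 500},  # outlier amount
]

report = quality_report(rows, required_fields=["email"], key_field="id", numeric_field="amount")
print(report)
```

The report lists row indexes rather than fixing anything, so the decision to correct, drop, or escalate each issue stays with the tester.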

5. Data Integration Testing

Big data environments typically involve data from various sources. Data integration testing validates that data from different sources is correctly integrated into a single repository. It ensures that data relationships and dependencies are accurately maintained, and data flows seamlessly between different components of the big data system.
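A common integration check is to confirm that relationships between sources survive the merge, for example that every order still points to a known customer. The following sketch assumes two hypothetical in-memory sources, `orders` and `customers`, joined on `customer_id`; the helper names `find_orphans` and `integrate` are illustrative only.

```python
def find_orphans(child_rows, parent_rows, foreign_key, parent_key):
    """Return child rows whose foreign key has no matching parent row."""
    parent_keys = {row[parent_key] for row in parent_rows}
    return [row for row in child_rows if row[foreign_key] not in parent_keys]


def integrate(orders, customers):
    """Join orders to customers, keeping only orders with a known customer."""
    customers_by_id = {c["customer_id"]: c for c in customers}
    return [
        {**order, "customer_name": customers_by_id[order["customer_id"]]["name"]}
        for order in orders
        if order["customer_id"] in customers_by_id
    ]


# Hypothetical sample data from two sources
customers = [
    {"customer_id": 1, "name": "Alice"},
    {"customer_id": 2, "name": "Bob"},
]
orders = [
    {"order_id": 100, "customer_id": 1, "total": 30.0},
    {"order_id": 101, "customer_id": 2, "total": 12.5},
    {"order_id": 102, "customer_id": 9, "total": 99.0},  # no matching customer
]

orphans = find_orphans(orders, customers, "customer_id", "customer_id")
merged = integrate(orders, customers)

print([o["order_id"] for o in orphans])
# Every order is either integrated or reported as an orphan; none silently vanish.
print(len(merged) + len(orphans) == len(orders))
```

Reporting orphans separately, instead of letting the join drop them, makes broken relationships visible to the tester.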

6. Performance Testing

Performance testing in Big Data involves evaluating the system’s capability to process data efficiently. This includes load testing, stress testing, and scalability testing. Performance testing assesses the system’s ability to handle increasing data volumes, real-time processing, and spikes in workloads without performance degradation.
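Scalability testing boils down to running the same job at increasing data volumes and watching how throughput changes. The sketch below shows the measurement loop only. The `process` function is a hypothetical stand-in for a real processing job, and the sizes are deliberately small; a real test would target the actual cluster with a tool such as JMeter or a Spark job.

```python
import time


def process(records):
    """Stand-in for a data processing job: aggregate totals per key."""
    totals = {}
    for key, value in records:
        totals[key] = totals.get(key, 0) + value
    return totals


def measure_throughput(job, sizes):
    """Run the job at each input size and record elapsed time and throughput."""
    results = []
    for n in sizes:
        records = [(i % 100, 1) for i in range(n)]
        start = time.perf_counter()
        output = job(records)
        elapsed = time.perf_counter() - start
        results.append({
            "records": n,
            "seconds": elapsed,
            "records_per_second": n / elapsed if elapsed > 0 else float("inf"),
            # Sanity check: the job must still produce correct output under load.
            "output_total": sum(output.values()),
        })
    return results


results = measure_throughput(process, sizes=[1_000, 10_000, 100_000])
for row in results:
    print(row["records"], round(row["seconds"], 4), round(row["records_per_second"]))
```

If throughput drops sharply as the input grows, the job does not scale linearly and deserves a closer look. Checking `output_total` alongside the timings guards against a job that gets faster only because it is silently dropping data.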

7. Security and Privacy Testing

In the era of stringent data privacy regulations, security and privacy testing are paramount. It involves ensuring that sensitive data is adequately protected, and access controls are implemented. Security testing also assesses vulnerabilities, while privacy testing verifies compliance with data protection regulations like GDPR and CCPA.
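One simple privacy check is to scan a dataset that is supposed to be masked for values that still look like personal data. The sketch below searches for raw email addresses. The regular expression is a deliberately simple approximation and the function name `find_exposed_emails` is hypothetical; a real scan would cover more data types (phone numbers, national IDs, card numbers) and would not by itself demonstrate GDPR or CCPA compliance.

```python
import re

# Simplified email pattern for illustration; it will not catch every valid address.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def find_exposed_emails(rows):
    """Return (row_index, field) pairs where a raw email address appears."""
    findings = []
    for i, row in enumerate(rows):
        for field, value in row.items():
            if isinstance(value, str) and EMAIL_PATTERN.search(value):
                findings.append((i, field))
    return findings


# Hypothetical export that is supposed to contain no raw email addresses
rows = [
    {"user_id": "u1", "email": "[REDACTED]", "comment": "all good"},
    {"user_id": "u2", "email": "[REDACTED]", "comment": "contact me at jane.doe@example.com"},
    {"user_id": "u3", "email": "bob@example.org", "comment": ""},
]

print(find_exposed_emails(rows))
```

Note that the second finding comes from a free-text comment field, which is a typical place for sensitive data to leak past column-level masking.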

8. Regression Testing

As big data systems are continually evolving, regression testing is crucial to ensure that changes or updates do not introduce new issues or negatively impact existing data quality and performance. It involves retesting to validate that data quality and processing performance remain consistent.
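In practice, regression testing often means capturing a baseline of key metrics from a known-good run and comparing each new run against it. The sketch below illustrates that comparison. The metric names and the `compare_to_baseline` helper are hypothetical, and the baseline is an in-memory dictionary here, whereas a real setup would load it from a stored snapshot.

```python
def compare_to_baseline(baseline, current, tolerance=0.0):
    """Diff two metric snapshots and report added, removed, and changed metrics."""
    added = sorted(current.keys() - baseline.keys())
    removed = sorted(baseline.keys() - current.keys())
    changed = sorted(
        name for name in baseline.keys() & current.keys()
        if abs(current[name] - baseline[name]) > tolerance
    )
    return {"added": added, "removed": removed, "changed": changed}


# Hypothetical metrics captured before and after a pipeline change
baseline = {"row_count": 1000, "null_emails": 0, "total_revenue": 52340.5}
current = {"row_count": 1000, "null_emails": 12, "total_revenue": 52340.5, "avg_latency_ms": 40}

diff = compare_to_baseline(baseline, current)
print(diff)

# A regression test would fail the build if any existing metric changed or disappeared.
has_regression = bool(diff["changed"] or diff["removed"])
print(has_regression)
```

Here the new `avg_latency_ms` metric is reported as added, while the jump in `null_emails` is flagged as a change that the team should investigate before releasing.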

9. User Interface Testing

In cases where big data analytics platforms have user interfaces or dashboards, user interface testing ensures that these interfaces provide a user-friendly experience and accurately represent the data. It focuses on data visualization and usability.

10. Compliance Testing

This type of testing focuses on ensuring that the data processing and storage comply with relevant industry standards and regulations. It assesses whether the system adheres to data governance, auditing, and compliance requirements.

Challenges in Big Data Testing

- Data Volume: Big data systems typically involve terabytes or even petabytes of data, making it impractical to test the entire dataset. Testers must select appropriate samples for testing.

- Data Variety: Data in big data environments can be structured, semi-structured, or unstructured, making it difficult to define uniform testing strategies.

- Data Velocity: The speed at which data is generated and processed is often very high, requiring real-time testing capabilities.

- Data Quality: Ensuring data quality is a significant challenge due to the potential presence of dirty data, duplicate records, and missing values.

- Scalability: As data volumes grow, the testing infrastructure must be scalable to handle the increasing load.

- Complexity and Integration Problems: Because big data is collected from many sources, it is not always compatible or coordinated, and its formats may differ from those used by enterprise applications.

- Cost Challenges: Consistent development, integration, and testing of big data systems require many big data specialists, which increases costs for the business.

- Higher Technical Expertise: Working with big data involves more than testers alone; it also requires the expertise of other roles, such as developers and project managers.

Role of the Tester in Big Data Testing

Before diving into the role of testers in Big Data Testing, it’s essential to grasp the nuances of big data. Unlike traditional data, big data is characterized by:

- Volume: Enormous amounts of data, often measured in terabytes, petabytes, or even exabytes.

- Variety: Data comes in diverse formats, including structured, semi-structured, and unstructured data.

- Velocity: Data is generated, ingested, and processed at high speeds, sometimes in real-time.

- Complexity: Data sources can be distributed, and processing can involve multiple technologies and systems.

The Tester’s Role in Big Data Testing

1. Test Planning and Strategy

Testers are responsible for creating a comprehensive Big Data Testing strategy. This includes understanding the objectives of the testing process, defining testing criteria, selecting appropriate testing methodologies, and establishing a clear roadmap for the testing process.

2. Data Validation

One of the fundamental responsibilities of testers in Big Data Testing is to validate the data. This involves creating validation rules and algorithms to verify data correctness and consistency. Testers must ensure that data adheres to the defined standards and requirements.
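Validation rules like those described above can be expressed as small named checks that each record must pass. The following is a minimal sketch of that pattern. The rule names, field names, and thresholds are all hypothetical and would be replaced by the standards and requirements defined for a real dataset.

```python
# Each rule maps a name to a function that returns True when the record is valid.
RULES = {
    "age_in_range": lambda r: isinstance(r.get("age"), int) and 0 <= r["age"] <= 120,
    "country_is_two_uppercase_letters": lambda r: (
        isinstance(r.get("country"), str)
        and len(r["country"]) == 2
        and r["country"].isupper()
    ),
    "order_total_non_negative": lambda r: (
        isinstance(r.get("order_total"), (int, float)) and r["order_total"] >= 0
    ),
}


def validate(record, rules=RULES):
    """Return the names of all rules that the record violates."""
    return [name for name, check in rules.items() if not check(record)]


good = {"age": 34, "country": "DE", "order_total": 19.9}
bad = {"age": 150, "country": "Germany", "order_total": -5}

print(validate(good))
print(validate(bad))
```

Keeping rules in a dictionary makes it easy to add or remove checks without touching the validation loop, and the returned rule names tell the tester exactly which requirement a record failed.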

3. Data Quality Assurance

Testers play a pivotal role in ensuring data quality. They need to identify and address issues related to data accuracy, consistency, completeness, and integrity. This involves detecting and rectifying anomalies, missing values, duplicate records, and other data quality issues.

4. Performance Testing

Performance testing is crucial in the world of big data, where data processing speed can impact real-time decision-making. Testers assess the system’s ability to handle varying workloads, scalability, and efficiency. This includes load testing, stress testing, and performance optimization.

5. Data Security and Privacy

Ensuring data security and privacy is a paramount concern, particularly in light of stringent data protection regulations. Testers must validate that sensitive data is adequately protected, access controls are in place, and data is anonymized or masked to maintain privacy.
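To illustrate what masking and pseudonymization can look like, and how a tester might verify them, here is a small sketch. It assumes a keyed hash (HMAC-SHA256) for identifiers and simple character masking for email addresses. The secret key, field names, and helper functions are hypothetical, and the right technique for a real system depends on its regulatory and business requirements.

```python
import hashlib
import hmac

# Assumption for the demo only: a real key would come from a secrets manager.
SECRET_KEY = b"demo-only-secret"


def pseudonymize(value, secret=SECRET_KEY):
    """Replace an identifier with a stable keyed hash (truncated for readability)."""
    return hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def mask_email(email):
    """Keep the first character and the domain, mask the rest of the local part."""
    local, _, domain = email.partition("@")
    if not domain:
        return "*" * len(email)
    return local[:1] + "*" * (len(local) - 1) + "@" + domain


def mask_record(record):
    """Return a copy of the record with sensitive fields masked."""
    masked = dict(record)
    masked["customer_id"] = pseudonymize(record["customer_id"])
    masked["email"] = mask_email(record["email"])
    return masked


original = {"customer_id": "C-1001", "email": "alice@example.com", "plan": "pro"}
masked = mask_record(original)

print(masked["email"])
# Tester's checks: sensitive values are gone, non-sensitive values are untouched.
print(original["customer_id"] not in masked.values())
print(masked["plan"] == original["plan"])
```

Because the keyed hash is deterministic, the same customer always maps to the same pseudonym, so joins across masked datasets still work.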

6. Regression Testing

Big data systems are constantly evolving. Testers are responsible for conducting regression testing to ensure that new changes or updates do not introduce new issues or negatively impact existing data quality and performance.

7. Collaboration and Communication

Testers collaborate closely with data engineers, data scientists, quality assurance teams, and business stakeholders to ensure alignment on testing objectives and results. Effective communication is essential for a successful Big Data Testing process.

8. Selecting and Using Testing Tools

Testers choose and utilize a range of tools and frameworks designed for Big Data Testing. Tools like Apache Hadoop, Apache Spark, and JMeter help automate testing processes, analyze data, and validate data processing efficiency.

9. Compliance Testing

In a world governed by data privacy regulations, testers play a crucial role in ensuring that the big data system complies with relevant industry standards and legal requirements. They conduct compliance testing to verify adherence to data governance, auditing, and compliance mandates.

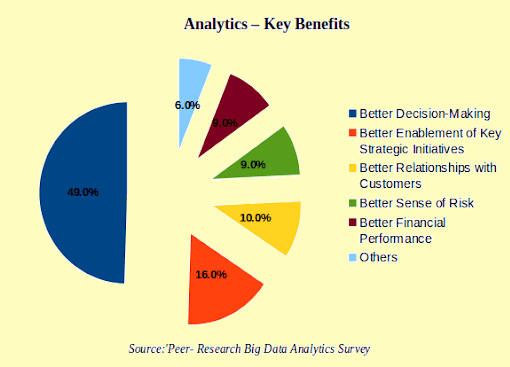

Benefits of Big Data Testing

Big Data has transformed the way organizations operate and make informed decisions. The wealth of information it provides can be a game-changer, but only if it’s reliable and accurate. This is where Big Data Testing comes into play. By systematically validating and ensuring the quality of data, Big Data Testing offers a multitude of benefits that are crucial for data-driven success. This section explores those benefits.

1. Data Accuracy

One of the primary benefits of Big Data Testing is the assurance of data accuracy. Ensuring that the data is correct, consistent, and trustworthy is fundamental to making informed decisions. By rigorously testing data, organizations can rely on the accuracy of the insights derived from it, reducing the risk of erroneous decisions and costly mistakes.

2. Enhanced Data Quality

Big Data Testing is instrumental in enhancing data quality. It helps identify and rectify issues such as missing values, duplicate records, outliers, and inconsistencies. By maintaining high data quality standards, organizations can provide superior customer experiences, streamline operations, and ensure the integrity of their data assets.

3. Improved Decision-Making

Reliable and accurate data is the bedrock of effective decision-making. With Big Data Testing, organizations can have confidence in the data they rely on for strategic choices. This leads to more informed decisions, better resource allocation, and the ability to spot opportunities and threats in a timely manner.

4. Data Privacy and Compliance

In a world where data privacy regulations are increasingly stringent, Big Data Testing helps organizations ensure they are in compliance. By identifying and mitigating potential data privacy and security vulnerabilities, organizations can avoid legal repercussions and maintain customer trust.

5. Optimized Performance

Performance testing in Big Data ensures that data processing jobs are efficient and timely. By assessing the system’s ability to handle varying workloads and optimizing its performance, organizations can make real-time decisions with confidence and agility.

6. Data Scalability

As data volumes continue to grow, the scalability of data systems becomes paramount. Big Data Testing helps assess and confirm that the system can handle increasing data loads effectively. It ensures that organizations can grow their data infrastructure without compromising on performance.

7. Business Agility

In a rapidly changing business environment, the ability to adapt quickly is a competitive advantage. Big Data Testing supports business agility by ensuring that the data infrastructure is robust and adaptable. It enables organizations to respond to changing requirements, integrate new data sources, and evolve their data processing workflows without disruption.

8. Cost Reduction

By identifying and rectifying data quality issues and optimizing performance, Big Data Testing can lead to cost reductions. It minimizes the operational errors that can result from inaccurate data, streamlines data processing, and prevents costly compliance violations.

9. Customer Satisfaction

High-quality data ensures that customer information is accurate and up to date. This not only reduces operational errors but also enhances the overall customer experience, leading to increased customer satisfaction and loyalty.

10. Maintaining Trust

Ultimately, Big Data Testing helps organizations maintain the trust of their stakeholders. When data is proven to be reliable and accurate, it fosters trust and confidence in the information derived from it, both internally and externally.

Big Data Testing Tools

Several tools and frameworks are available to aid in Big Data Testing. Some of the most popular ones include:

- Apache Hadoop: A framework that supports distributed storage and processing of big data. Hadoop can be used for testing data processing and storage components.

- Apache Spark: An open-source, distributed computing system, widely used for real-time data processing and analytics. Spark can be employed for performance testing.

- Apache Kafka: A distributed streaming platform that is invaluable for testing real-time data ingestion and processing.

- Hive: A data warehouse infrastructure that can be used for data validation and querying.

- Selenium: While typically associated with web application testing, Selenium can also be employed for testing big data user interfaces and dashboards.

- JMeter: A popular tool for load and performance testing, JMeter can be used to evaluate the performance of big data systems.

Big Data Testing Best Practices

To overcome the challenges associated with Big Data Testing, it’s essential to follow best practices. Here are some of the key guidelines for effective Big Data Testing:

- Define Clear Testing Objectives: Clearly define the testing objectives, including what aspects of data quality and performance are critical to your organization.

- Data Sampling: Instead of testing the entire dataset, use statistical sampling techniques to select representative data subsets for testing.

- Automation: Leverage automation tools to design and execute test cases, as manual testing can be time-consuming and error-prone.

- Data Validation: Develop validation rules and algorithms to verify data correctness and consistency.

- Data Masking: Ensure sensitive data is masked or anonymized to maintain data privacy and security.

- Performance Testing: Assess the performance of data processing jobs, as latency can severely impact real-time decision-making.

- Scalability Testing: Validate whether the system can handle increased data loads and scaling demands effectively.

- Regression Testing: Continuously test data quality and performance as data sources and data processing logic evolve.
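The data sampling practice above can be sketched with stratified sampling, which draws the same fraction from each group so that small groups are not lost. This is a minimal illustration under stated assumptions: the `stratified_sample` helper, the `source` field, and the 10% fraction are hypothetical, and a fixed seed is used so that test runs are reproducible.

```python
import random
from collections import Counter, defaultdict


def stratified_sample(rows, stratum_key, fraction, seed=42):
    """Draw the same fraction from every stratum, with at least one row each."""
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    groups = defaultdict(list)
    for row in rows:
        groups[row[stratum_key]].append(row)

    sample = []
    for stratum in sorted(groups):
        members = groups[stratum]
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical dataset: 1,000 records from three sources of very different sizes
rows = (
    [{"id": i, "source": "web"} for i in range(900)]
    + [{"id": 900 + i, "source": "mobile"} for i in range(90)]
    + [{"id": 990 + i, "source": "batch"} for i in range(10)]
)

sample = stratified_sample(rows, stratum_key="source", fraction=0.1)
print(len(sample))
print(Counter(row["source"] for row in sample))
```

A plain random sample of 100 rows could easily contain no `batch` records at all, whereas the stratified version keeps every source represented in proportion to its size.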

Afterthought?

Big Data Testing is a critical component in ensuring the reliability, accuracy, and performance of big data systems.

As organizations increasingly rely on data to make informed decisions, the importance of effective Big Data Testing cannot be overstated.

By following best practices, leveraging the right tools, and embracing automation, organizations can navigate the challenges of testing large and complex datasets and ultimately gain confidence in their data-driven strategies.
